Aion Overview¶

Aion is an Atos/Bull/AMD supercomputer consisting of 354 compute nodes, totaling 45312 compute cores and 90624 GB RAM, with a peak performance of about 1.88 PetaFLOP/s.

All nodes are interconnected through a Fast InfiniBand (IB) HDR100 network, configured over a Fat-Tree topology (blocking factor 1:2). Aion nodes are equipped with AMD EPYC ROME 7H12 processors.
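To make the 1:2 blocking factor concrete, the sketch below estimates the bandwidth each node gets when every node attached to one leaf switch sends traffic off that switch at the same time. The leaf size (24 nodes, 12 uplinks) is a hypothetical illustration, not the actual Aion switch layout, and uplinks are assumed to run at the same 100 Gb/s as the HDR100 node links.

```python
LINK_GBPS = 100  # HDR100 port speed in Gb/s


def worst_case_offleaf_gbps(nodes_on_leaf, uplinks, link_gbps=LINK_GBPS):
    """Per-node bandwidth when all nodes on a leaf switch send off-leaf at once.

    The result is capped by the node's own link speed and by its fair share
    of the leaf switch's total uplink capacity.
    """
    uplink_capacity = uplinks * link_gbps
    return min(link_gbps, uplink_capacity / nodes_on_leaf)


# Hypothetical leaf switch: 24 node links and 12 uplinks, i.e. a 1:2 blocking factor.
blocking = worst_case_offleaf_gbps(nodes_on_leaf=24, uplinks=12)

# Same leaf with as many uplinks as node links, i.e. non-blocking.
non_blocking = worst_case_offleaf_gbps(nodes_on_leaf=24, uplinks=24)

print(f"1:2 blocking: {blocking:.0f} Gb/s per node, non-blocking: {non_blocking:.0f} Gb/s per node")
```

Under these assumptions, traffic that stays within one leaf switch still sees the full link speed; only fully loaded off-leaf traffic is halved.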

Two global high-performance clustered file systems are available on all ULHPC computational systems: one based on GPFS/SpectrumScale, one on Lustre.


The cluster runs a Red Hat Linux operating system. On all clusters, the ULHPC Team supplies a large variety of HPC utilities, scientific applications and programming libraries to its user community. The user software environment is generated using EasyBuild (EB) and is made available as environment modules on the compute nodes only.

Slurm is the Resource and Job Management System (RJMS), which provides computing resource allocation and job execution. For more information: see ULHPC slurm docs.

Cluster Organization¶

Data Center Configuration¶

The Aion cluster is based on a cell made of 4 adjacent BullSequana XH2000 racks installed in the CDC (Centre de Calcul) data center of the University, within one of the DLC-enabled server rooms (CDC-S02-004), adjacent to the room hosting the Iris cluster and the global storage.

Each rack has the following dimensions: HxWxD (mm) = 2030x750x1270 (depth is 1350 mm with aesthetic doors). The full solution with 4 racks (total dimensions: HxWxD (mm) = 2030x3000x1270) has the following characteristics:

| | Rack 1 | Rack 2 | Rack 3 | Rack 4 | TOTAL |
|---|---|---|---|---|---|
| Weight [kg] | 1872.4 | 1830.2 | 1830.2 | 1824.2 | 7357 |
| #X2410 Rome Blade | 30 | 29 | 29 | 30 | 118 |
| #Compute Nodes | 90 | 87 | 87 | 90 | 354 |
| #Compute Cores | 11520 | 11136 | 11136 | 11520 | 45312 |
| $R_\text{peak}$ [TFlops] | 479.23 | 463.25 | 463.25 | 479.23 | 1884.96 |
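The per-rack figures in the table can be cross-checked with a short calculation. The sketch below derives node and core counts from the blade counts (3 nodes per blade and 128 cores per node, both read off the table ratios) and estimates the peak performance. The 2.6 GHz base clock and the 16 double-precision FLOPs per cycle per core are assumptions about the AMD EPYC 7H12 that this page does not state.

```python
NODES_PER_BLADE = 3    # from the table: 90 nodes / 30 blades
CORES_PER_NODE = 128   # from the table: 11520 cores / 90 nodes
BASE_CLOCK_GHZ = 2.6   # assumption: EPYC 7H12 base clock
FLOPS_PER_CYCLE = 16   # assumption: double-precision FLOPs per cycle per core

BLADES_PER_RACK = {"Rack 1": 30, "Rack 2": 29, "Rack 3": 29, "Rack 4": 30}


def rack_summary(blades):
    """Return (nodes, cores, peak TFlops) for a rack holding `blades` blades."""
    nodes = blades * NODES_PER_BLADE
    cores = nodes * CORES_PER_NODE
    # cores * GHz * FLOPs/cycle gives GFlops; divide by 1000 for TFlops.
    rpeak_tflops = cores * BASE_CLOCK_GHZ * FLOPS_PER_CYCLE / 1000
    return nodes, cores, rpeak_tflops


summaries = {rack: rack_summary(blades) for rack, blades in BLADES_PER_RACK.items()}
total_nodes = sum(nodes for nodes, _, _ in summaries.values())
total_cores = sum(cores for _, cores, _ in summaries.values())
total_rpeak = sum(rpeak for _, _, rpeak in summaries.values())

for rack, (nodes, cores, rpeak) in summaries.items():
    print(f"{rack}: {nodes} nodes, {cores} cores, {rpeak:.2f} TFlops")
print(f"TOTAL: {total_nodes} nodes, {total_cores} cores, {total_rpeak:.2f} TFlops")
```

Under these assumptions the node and core totals match the table exactly. The peak values agree to within a few hundredths of a TFlops, which is consistent with the table entries having been rounded or truncated per rack before being summed.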

For more details: BullSequana XH2000 SpecSheet (PDF)

Cooling¶

The BullSequana XH2000 is an innovative fanless cooling solution that is ultra-energy-efficient (targeting a PUE very close to 1), using an enhanced version of the Bull Direct Liquid Cooling (DLC) technology. A separate hot-water circuit minimizes the total energy consumption of the system. For more information: see [Direct] Liquid Cooling.

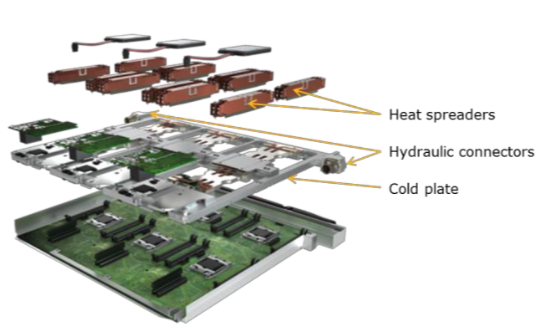

The illustration above shows an exploded view of a compute blade with the cold plate and heat spreaders.

The DLC components in the rack are:

- Compute nodes (CPU, Memory, Drives, GPU)

- High Speed Interconnect: HDR

- Management network: Ethernet management switches

- Power Supply Unit: DLC shelves

The cooling area in the rack is composed of:

- 3 Hydraulic chassis (HYCs) for 2+1 redundancy at the bottom of the cabinet, 10.5U height.

- Each HYC dissipates a maximum of 240 W into the air.

- A primary manifold system connects the University hot-water loop to the HYCs primary water inlets

- A secondary manifold system connects HYCs outlets to each blade in the compute cabinet

Login/Access servers¶

- Aion has 2 access servers (256 GB of memory each, general access): `access[1-2]`

- Each login node has two sockets, each populated with an AMD EPYC 7452 processor (2.2 GHz, 32 cores)

Access servers are not meant for compute!

- The `module` command is not available on the access servers, only on the compute nodes.

- You MUST NOT run any computing process on the access servers.

Rack Cabinets¶

The Aion cluster (management, compute and interconnect) is installed across two adjacent server rooms on the premises of the Centre de Calcul (CDC): CDC-S02-005 and CDC-S02-004.

| Server Room | Rack ID | Purpose | Type | Description |
|---|---|---|---|---|
| CDC-S02-005 | D02 | Network | | Interconnect equipment |
| CDC-S02-005 | A04 | Management | | Management servers, Interconnect |
| CDC-S02-004 | A01 | Compute | regular | aion-[0001-0084,0319-0324], interconnect |
| CDC-S02-004 | A02 | Compute | regular | aion-[0085-0162,0325-0333], interconnect |
| CDC-S02-004 | A03 | Compute | regular | aion-[0163-0240,0334-0342], interconnect |
| CDC-S02-004 | A04 | Compute | regular | aion-[0241-0318,0343-0354], interconnect |
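The node ranges in the Description column use the compact bracket notation for host lists. As a minimal sketch, the helper below (`expand_hostlist`, a hypothetical name that is not part of any ULHPC tool) expands such an expression into individual host names and counts the nodes per compute rack. It only handles the simple `prefix[a-b,c-d]` form shown in the table.

```python
import re


def expand_hostlist(expr):
    """Expand 'prefix[a-b,c-d]' into individual host names, keeping zero padding."""
    match = re.fullmatch(r"([^\[\]]+)\[([^\[\]]+)\]", expr)
    if match is None:
        return [expr]  # plain host name, nothing to expand
    prefix, ranges = match.groups()
    hosts = []
    for part in ranges.split(","):
        start, _, end = part.partition("-")
        end = end or start  # a single index such as '0042'
        width = len(start)  # preserve the zero-padded width
        for index in range(int(start), int(end) + 1):
            hosts.append(f"{prefix}{index:0{width}d}")
    return hosts


# Node ranges per compute rack, copied from the table above.
RACK_NODES = {
    "A01": "aion-[0001-0084,0319-0324]",
    "A02": "aion-[0085-0162,0325-0333]",
    "A03": "aion-[0163-0240,0334-0342]",
    "A04": "aion-[0241-0318,0343-0354]",
}

counts = {rack: len(expand_hostlist(expr)) for rack, expr in RACK_NODES.items()}
for rack, count in counts.items():
    print(f"{rack}: {count} nodes")
print(f"Total: {sum(counts.values())} nodes")
```

The resulting counts (90, 87, 87 and 90 nodes) match the per-rack node counts given in the Data Center Configuration table. On a system where Slurm is installed, `scontrol show hostnames` performs the same kind of expansion.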

In addition, the global storage equipment (GPFS/SpectrumScale and Lustre, common to both Iris and Aion clusters) is installed in another row of cabinets of the same server room.