
ULHPC Workflow

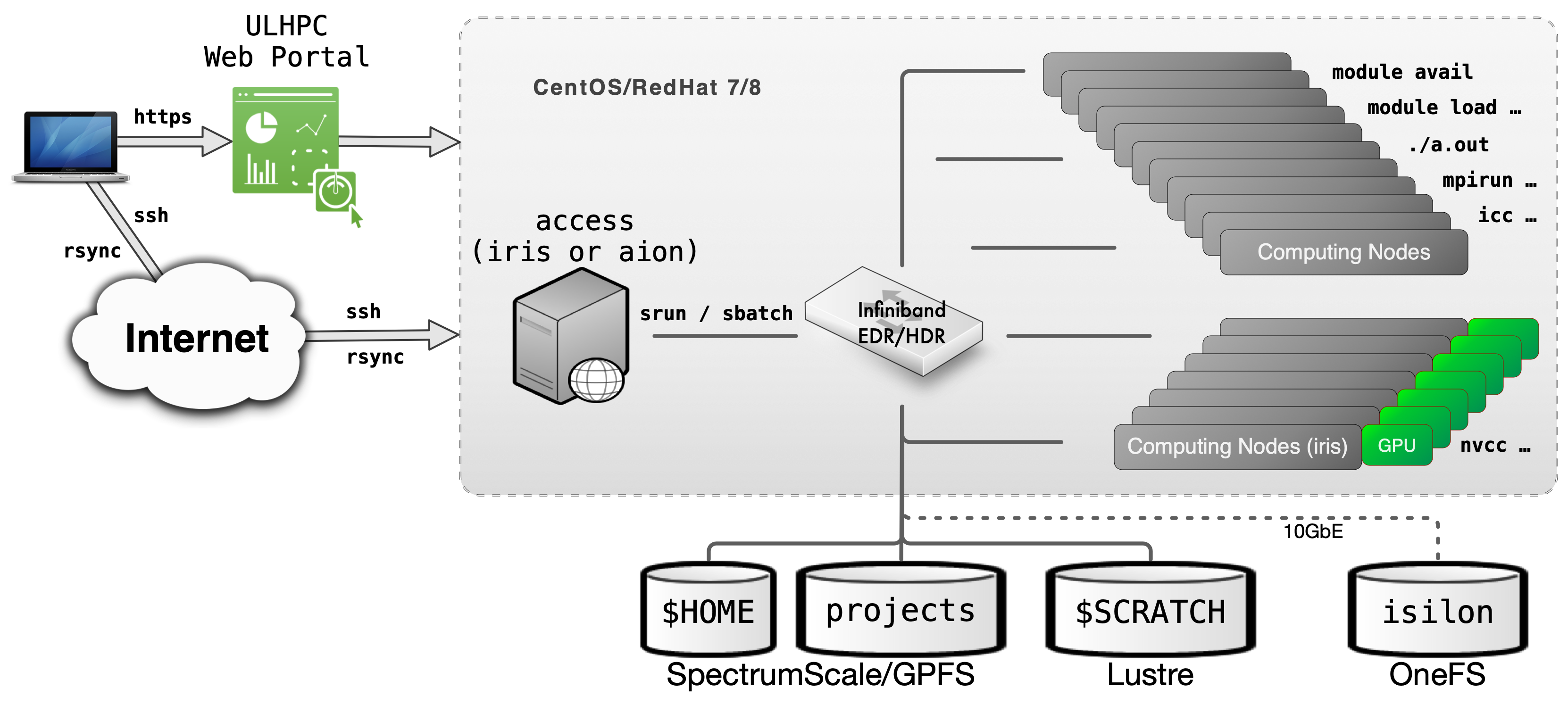

Your typical journey on the ULHPC facility is illustrated in the below figure.

Typical workflow on UL HPC resources

Your daily interaction with the ULHPC facility includes the following actions:

Preliminary setup

- Connect to the access/login servers
    - This can be done either by `ssh` (recommended) or via the ULHPC Open OnDemand (OOD) portal
    - (advanced users) at this point, you probably want to create (or reattach to) a `screen` or `tmux` session
- Synchronize your code and/or transfer your input data, typically using `rsync`, `svn` or `git`
    - recall that the different storage filesystems are shared (via a high-speed interconnect network) among the computational resources of the ULHPC facilities. In particular, it is sufficient to exchange data with the access servers to make them available on the clusters
- Reserve a few interactive resources with `salloc -p interactive [...]`
    - recall that the `module` command (used to load the ULHPC User software) is only available on the compute nodes
    - if needed, build your program, typically using `gcc`, `icc`, `mpicc`, `nvcc`, ...
    - test your workflow / HPC analysis on a small-size problem (`srun`, `python`, `sh`, ...)
    - prepare a launcher script `<launcher>.{sh|py}`
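The connection and synchronization steps above can be scripted from your own machine. The following is a minimal sketch, not an official ULHPC tool: the helper names (`ulhpc_push`, `ulhpc_pull`, `ulhpc_connect`), the login `yourlogin`, the access server `access-iris.uni.lu`, the SSH port `8022` and the project directory `./myproject/` are all assumptions to adapt. By default (`DRY_RUN=1`) it only prints the commands; set `DRY_RUN=0` to actually run them.

```shell
#!/usr/bin/env bash
# Minimal sketch of laptop-side helpers for the ULHPC workflow.
# ASSUMPTIONS (adapt them): login, access server and SSH port are placeholders;
# the helper names are hypothetical, not an official ULHPC tool.
ULHPC_LOGIN="${ULHPC_LOGIN:-yourlogin}"
ULHPC_HOST="${ULHPC_HOST:-access-iris.uni.lu}"
ULHPC_PORT="${ULHPC_PORT:-8022}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print a command (arguments joined by spaces, for display only)
# and execute it only when DRY_RUN=0.
run() {
  printf '+ %s\n' "$*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

# Push local files to your home directory on the cluster: the storage
# filesystems are shared, so reaching the access server is enough.
ulhpc_push() {  # usage: ulhpc_push LOCAL_SRC REMOTE_DEST
  run rsync -avz --partial -e "ssh -p ${ULHPC_PORT}" \
    "$1" "${ULHPC_LOGIN}@${ULHPC_HOST}:$2"
}

# Pull results back from the cluster to your machine.
ulhpc_pull() {  # usage: ulhpc_pull REMOTE_SRC LOCAL_DEST
  run rsync -avz --partial -e "ssh -p ${ULHPC_PORT}" \
    "${ULHPC_LOGIN}@${ULHPC_HOST}:$1" "$2"
}

# Log in and create, or reattach to, a tmux session (default name: "work").
ulhpc_connect() {  # usage: ulhpc_connect [SESSION]
  run ssh -t -p "${ULHPC_PORT}" "${ULHPC_LOGIN}@${ULHPC_HOST}" \
    tmux new-session -A -s "${1:-work}"
}

# Example: synchronize a (hypothetical) project, then open a session.
ulhpc_push ./myproject/ myproject/
ulhpc_connect
```

`tmux new-session -A` attaches to the session if it already exists and creates it otherwise, so the same command serves both for the first login and for reconnecting; detaching (`Ctrl-b d` by default) leaves the session running on the access server.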

Then you can proceed with your Real Experiments:

- Reserve passive resources: `sbatch [...] <launcher>`
- Grab the results and, if needed, transfer your output results back using `rsync`, `svn` or `git`
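The launcher preparation and passive submission steps can be sketched as below. This is an illustration under assumptions, not a ULHPC-provided template: the job name, the `batch` partition, the resource sizes, the module name `toolchain/foss` and the program `./my_app` are hypothetical placeholders. The script writes `launcher.sh`, checks its syntax with `bash -n`, and only submits it with `sbatch` when `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Minimal sketch: write a Slurm launcher, check its syntax, then submit it.
# ASSUMPTIONS: job name, partition "batch", resource sizes, module name and
# ./my_app are hypothetical placeholders to adapt.
set -eu

# Quoted 'EOF': variables such as SLURM_JOB_ID are expanded inside the job,
# not when the file is written.
cat > launcher.sh <<'EOF'
#!/bin/bash -l
#SBATCH --job-name=my_job
#SBATCH --partition=batch
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=4
#SBATCH --cpus-per-task=1
#SBATCH --time=00:30:00
#SBATCH --output=%x-%j.out

# The module command is only available on the compute nodes,
# i.e. inside the job (hypothetical module name).
module purge
module load toolchain/foss

echo "Job ${SLURM_JOB_ID} running on ${SLURM_JOB_NUM_NODES} node(s)"
# Start one instance of the (hypothetical) program per Slurm task.
srun ./my_app
EOF

# Parse the launcher without executing it.
bash -n launcher.sh

# Submit only when DRY_RUN=0; by default just print the command.
if [ "${DRY_RUN:-1}" = "0" ]; then
  sbatch launcher.sh
  squeue -u "$USER"   # check the state of your jobs
else
  echo "+ sbatch launcher.sh"
fi

# Afterwards, from your own machine, fetch the results back with rsync, e.g.
#   rsync -avz -e "ssh -p 8022" yourlogin@access-iris.uni.lu:myproject/results/ ./results/
```

Before submitting, the body of the launcher (`module load ...`, `srun ./my_app`) can be tried by hand on a small problem inside an allocation obtained with `salloc -p interactive [...]`. The `%x-%j.out` pattern names the job log after the job name and job ID (here `my_job-<jobid>.out`), in the directory from which the job was submitted.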