Fast Local Interconnect Network

The fast local interconnect network implemented within Iris relies on Mellanox InfiniBand (IB) EDR[^1] technology. For more details, see Introduction to High-Speed InfiniBand Interconnect.

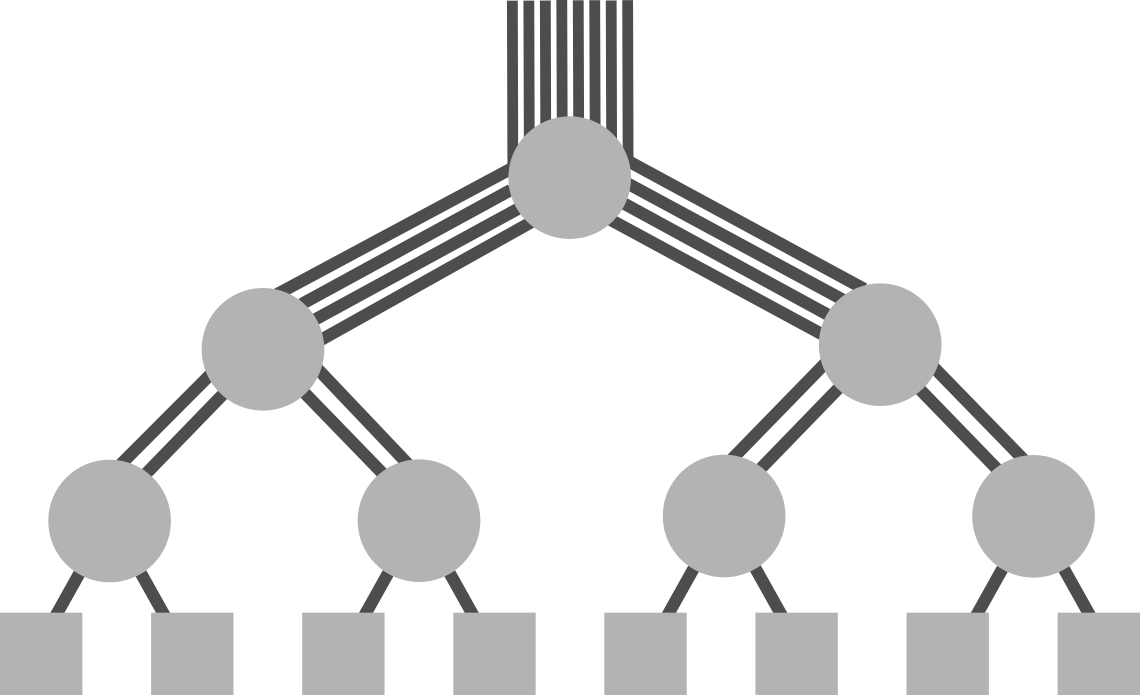

Apart from the interconnect technology deployed, one of the most significant differentiators between HPC systems and lower-performing systems is the supporting topology. Several topologies are commonly used in large-scale HPC deployments (Fat-Tree, 3D-Torus, Dragonfly+, etc.).

Iris (like Aion) is part of an island that employs a "Fat-Tree" topology[^2], which remains widely used in HPC clusters due to its versatility, high bisection bandwidth and well-understood routing.

The Iris 2-level 1:1.5 Fat-Tree is composed of:

- 18x InfiniBand EDR[^1] Mellanox SB7800 switches (36 ports each)

    - 12x Leaf IB (LIB) switches (L1), each with 12 EDR L1-L2 interlinks

    - 6x Spine IB (SIB) switches (L2), each with 8 EDR downlinks (total: 48 links) used for the interconnection with the Aion cluster

- Up to 24 EDR connections for Iris compute nodes and servers per L1 switch, using 24 EDR ports
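
The figures in the list above can be cross-checked with a little arithmetic. The following is a minimal sketch, not an official ULHPC tool: the constant and function names are hypothetical, the 100 Gb/s value is the nominal rate of a standard 4x EDR link, and the even spread of leaf uplinks across the spines is an assumption made for the calculation.

```python
# Port-budget check for the 2-level Fat-Tree described in the list above.
# Counts come from that list; names and the even-spread assumption are illustrative.

PORTS_PER_SWITCH = 36      # SB7800: 36 EDR ports per switch
LEAF_SWITCHES = 12         # L1 (LIB) switches
SPINE_SWITCHES = 6         # L2 (SIB) switches
UPLINKS_PER_LEAF = 12      # EDR L1-L2 interlinks per leaf
MAX_NODES_PER_LEAF = 24    # EDR ports for nodes and servers per leaf
AION_LINKS_PER_SPINE = 8   # EDR downlinks per spine towards Aion
EDR_GBPS = 100             # nominal 4x EDR link rate (assumed standard value)


def fabric_summary():
    """Return derived port and link counts for the fabric."""
    total_interlinks = LEAF_SWITCHES * UPLINKS_PER_LEAF
    # Assumption: leaf uplinks are spread evenly over all spine switches.
    interlinks_per_spine = total_interlinks // SPINE_SWITCHES
    return {
        "leaf_ports_used": UPLINKS_PER_LEAF + MAX_NODES_PER_LEAF,
        "total_interlinks": total_interlinks,
        "interlinks_per_spine": interlinks_per_spine,
        "spine_ports_used": interlinks_per_spine + AION_LINKS_PER_SPINE,
        "aion_links": SPINE_SWITCHES * AION_LINKS_PER_SPINE,
        "max_node_ports": LEAF_SWITCHES * MAX_NODES_PER_LEAF,
        "uplink_gbps_per_leaf": UPLINKS_PER_LEAF * EDR_GBPS,
        "downlink_to_uplink_ratio": MAX_NODES_PER_LEAF / UPLINKS_PER_LEAF,
    }


if __name__ == "__main__":
    summary = fabric_summary()
    # Neither switch role may use more ports than the hardware provides.
    assert summary["leaf_ports_used"] <= PORTS_PER_SWITCH
    assert summary["spine_ports_used"] <= PORTS_PER_SWITCH
    for key, value in summary.items():
        print(f"{key}: {value}")
```

Under these figures a fully populated leaf uses all 36 ports (24 node ports plus 12 uplinks), and each spine uses 32 of its 36 ports. Note that 24 node ports against 12 uplinks gives a 2:1 ratio. The quoted 1:1.5 ratio would correspond to 18 node ports in use per leaf, which is an inference from the arithmetic rather than something stated here.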

For more details: ULHPC Fast IB Interconnect

Illustration of the Iris network cabling (IB and Ethernet) within one of the racks hosting the compute nodes: